Last year I published a blog post called “You can have an Open Source Personal Assistant in 2024”. All the pieces are in place for a home voice assistant that can help you with various tasks and interact with things in the real world. I want a private, local voice assistant I can speak to in a natural, conversational way. It should be able to answer my family’s random questions and maybe integrate with a few things in my house. Home Assistant, the popular open source home automation project, together with Ollama, which runs LLMs on your own hardware, made it real!

I’m going to set up Home Assistant on a Mac mini and run a few tests, comparing it with OpenAI’s voice assistant. I’ll record my tests, with some background, if you’d like to watch below. My son is helping me out (and taking naps), so get ready to rock with him in the video.

Rough Notes on Steps

1.) Download VirtualBox and set up a custom VDI, because the default VMDK was not big enough to install Piper and Whisper. I used the CLI to convert it to VDI, because modifying the size of the VMDK doesn’t work: HA fails to load afterward.

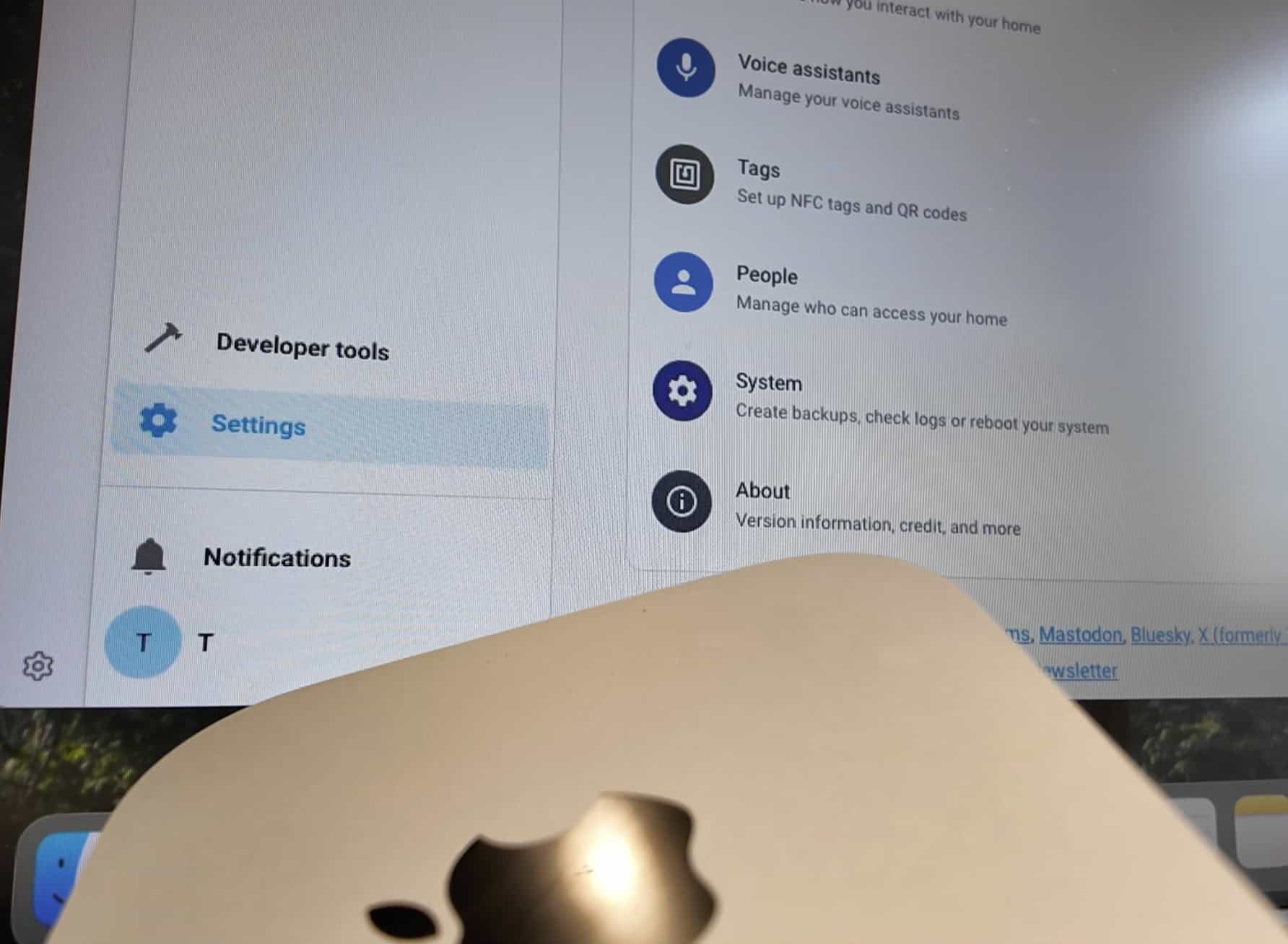
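If you’d rather script step 1 than click through it, here is a minimal sketch of the conversion. It assumes `VBoxManage` is on your PATH and that the Home Assistant OS image was downloaded as a VMDK; the file names (`haos.vmdk`, `haos.vdi`), the 32 GB target, and the helper names `gb_to_mb` and `grow_haos_disk` are my own placeholders. It clones the VMDK into a new VDI and then grows the VDI, rather than resizing the VMDK in place.

```bash
#!/usr/bin/env bash
# Sketch only: file names, sizes, and function names are hypothetical placeholders.
set -euo pipefail

# VBoxManage --resize takes a size in megabytes, so convert from gigabytes.
gb_to_mb() {
  echo $(( $1 * 1024 ))
}

# Clone a VMDK into a new VDI image, then grow the VDI.
# Usage: grow_haos_disk <source.vmdk> <dest.vdi> <size_gb>
grow_haos_disk() {
  local src="$1" dst="$2" size_gb="$3"
  # Write a copy of the disk in VDI format (the original VMDK is left untouched).
  VBoxManage clonemedium disk "$src" "$dst" --format VDI
  # Grow the new VDI to the requested size.
  VBoxManage modifymedium disk "$dst" --resize "$(gb_to_mb "$size_gb")"
}
```

After sourcing it, `grow_haos_disk haos.vmdk haos.vdi 32` produces a 32768 MB VDI, which you then attach to the VM in place of the original VMDK.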

2.) Configure HA and set up the voice assistant with the Voice Preview Edition.

3.) Settings → Add-ons: I tried changing the configuration, but for every model I tried the results were worse than with ‘auto’. I wish there were a way to see what ‘auto’ was doing.

4.) I noticed the time my voice assistant gave was incorrect, even though the system settings were correctly set to my time zone. I opened the HA app and checked the system settings to confirm, and eventually I got the correct time.

5.) The next challenge was configuring the Ollama server on my Mac mini so it was reachable on my local network. The solution I used, from their FAQ:

https://github.com/ollama/ollama/blob/main/docs/faq.md#setting-environment-variables-on-mac
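Here is a small sketch that wraps the `launchctl setenv` and `brew services restart` commands from Helpful Commands into one step, plus a local sanity check. It assumes Ollama was installed with Homebrew and is managed by `brew services`; the function names `expose_ollama` and `check_local` are hypothetical. The check relies on Ollama’s root endpoint replying with “Ollama is running”.

```bash
#!/usr/bin/env bash
# Sketch: assumes a Homebrew-installed Ollama managed by `brew services`.
set -euo pipefail

# 0.0.0.0 means "listen on all interfaces", so other machines on the LAN
# (like the Home Assistant VM) can reach the server, not just localhost.
OLLAMA_BIND="0.0.0.0:11434"

expose_ollama() {
  # Set the variable for processes launched by launchd on macOS.
  launchctl setenv OLLAMA_HOST "$OLLAMA_BIND"
  # Restart so the server picks up the new host setting.
  brew services restart ollama
}

# Local check: the root endpoint should print "Ollama is running".
check_local() {
  curl -fsS "http://127.0.0.1:11434/"
}
```

One caveat: `launchctl setenv` does not survive a reboot, so `expose_ollama` may need to be run again after restarting the Mac mini.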

6.) After setting my host with the command below, I had to get my machine’s IP address and use http://YOUR-IP-ADDRESS:11434 to get things working.
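Finding the IP and confirming the server is reachable can be sketched like this. It assumes macOS (`ipconfig getifaddr`) and that the LAN interface is `en0`, which is usually Ethernet on a Mac mini but may differ on your machine; `ollama_url`, `lan_ip`, and `check_remote` are hypothetical helper names. `/api/tags` is Ollama’s endpoint for listing installed models, so a JSON reply means the URL works.

```bash
#!/usr/bin/env bash
# Sketch: en0 is an assumption; check `ifconfig` if it returns nothing.
set -euo pipefail

# Build the base URL to paste into Home Assistant's Ollama integration.
ollama_url() {
  local ip="$1" port="${2:-11434}"
  echo "http://${ip}:${port}"
}

# Look up this Mac's LAN address (macOS-only command).
lan_ip() {
  ipconfig getifaddr "${1:-en0}"
}

# List installed models; success means the server is reachable at that URL.
check_remote() {
  curl -fsS "$1/api/tags"
}
```

Run `ollama_url "$(lan_ip)"` on the Mac mini to get the URL, then `check_remote http://YOUR-IP-ADDRESS:11434` from another machine on the network to confirm it is reachable from outside localhost.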

7.) gemma3:latest didn’t work for me, but I got things working with llama3.2.
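Before pointing Home Assistant at a model, it can help to confirm the model answers over the API at all. This sketch sends one non-streaming message to Ollama’s `/api/chat` endpoint. `OLLAMA_URL`, `chat_payload`, and `ask` are my own names, and the payload is built with `printf`, so it assumes the prompt contains no double quotes or backslashes. As for why Gemma 3 failed, my unverified guess is tool calling: Home Assistant uses tools when the model is allowed to control devices, and llama3.2 supports them in Ollama.

```bash
#!/usr/bin/env bash
# Sketch: the default URL is a placeholder; use http://YOUR-IP-ADDRESS:11434.
set -euo pipefail

OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"

# Build a non-streaming /api/chat request body.
# Assumption: the prompt has no characters that need JSON escaping.
chat_payload() {
  printf '{"model":"%s","stream":false,"messages":[{"role":"user","content":"%s"}]}' "$1" "$2"
}

# Send one question to the model and print the raw JSON reply.
ask() {
  curl -fsS "$OLLAMA_URL/api/chat" -d "$(chat_payload "$1" "$2")"
}
```

For example, run `ollama pull llama3.2`, set `OLLAMA_URL` to your server, and then run `ask llama3.2 "Tell me a fun fact about octopuses"`. The answer is in the `message.content` field of the reply.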

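Helpful Commands:

```bash
# Restart Ollama (e.g. after changing OLLAMA_HOST)
brew services restart ollama

# Make Ollama listen on all interfaces so other machines on the LAN can reach it
launchctl setenv OLLAMA_HOST "0.0.0.0:11434"

# Grow a VDI disk image to 32768 MB (32 GB)
VBoxManage modifymedium disk "new.vdi" --resize 32768
```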

Wish List:

Look into running my own TTS service for a more expressive voice, with something like: https://github.com/canopyai/Orpheus-TTS

Use a better model than Llama 3.2. Gemma 3 seems to need some extra configuration.

Look for ways to improve response time/latency.
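A first step toward improving latency is measuring where the time goes. Ollama’s non-streaming `/api/generate` reply includes `load_duration`, `total_duration`, `eval_count`, and `eval_duration`, all durations in nanoseconds. This sketch assumes `jq` is installed and `OLLAMA_URL` points at the server; `tokens_per_sec` and `measure` are hypothetical names, and the prompt again must not need JSON escaping.

```bash
#!/usr/bin/env bash
# Sketch: assumes jq is installed; function names are hypothetical.
set -euo pipefail

OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"

# Convert Ollama's eval stats (token count, nanoseconds) to tokens per second.
tokens_per_sec() {
  awk -v n="$1" -v ns="$2" 'BEGIN { printf "%.1f", n / (ns / 1e9) }'
}

# Time one generation and print load time, total time, and generation speed.
measure() {
  local model="$1" prompt="$2" resp
  # Non-streaming, so the single JSON reply carries the timing fields.
  resp="$(curl -fsS "$OLLAMA_URL/api/generate" \
    -d "{\"model\":\"$model\",\"prompt\":\"$prompt\",\"stream\":false}")"
  # A large load_duration means the model was loaded from disk (cold start).
  jq -r '"load: \(.load_duration / 1e9)s, total: \(.total_duration / 1e9)s"' <<<"$resp"
  echo "tokens/s: $(tokens_per_sec "$(jq '.eval_count' <<<"$resp")" "$(jq '.eval_duration' <<<"$resp")")"
}
```

Running `measure llama3.2 "Why is the sky blue?"` twice in a row should show whether the delay is mostly model loading (first call) or generation speed (both calls).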

Multilingual support: we speak Mandarin in the house, so it would be nice to have an assistant that can speak both Mandarin and English.

Integrate more of my home with the device (only the parts that make sense; I don’t want an LLM to have control over anything important at the moment).

Maybe develop a way to ‘wake up’ the Ollama server only when we need it, and use another, dumber agent for things like kitchen timers and basic commands. This would save resources and energy.
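The wake-up idea could start as something like this sketch: a crude keyword router decides whether a request needs the LLM, and Ollama is started only when it does. Everything here is hypothetical (the keyword list, the `basic`/`llm` labels, and the `route`/`ensure_ollama` names); it is not an existing Home Assistant feature, and it assumes a Homebrew-managed Ollama on the same machine.

```bash
#!/usr/bin/env bash
# Sketch of a "dumber agent first" router; keywords and names are placeholders.
set -euo pipefail

# Print "basic" for simple requests a lightweight handler could take, else "llm".
route() {
  local text
  # Lowercase with tr (works with the older bash that ships with macOS).
  text="$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')"
  case "$text" in
    *timer*|*"turn on"*|*"turn off"*|*"what time"*) echo "basic" ;;
    *) echo "llm" ;;
  esac
}

# Start Ollama only if it is not already answering, then wait up to ~30 s for it.
ensure_ollama() {
  if ! curl -fsS "http://127.0.0.1:11434/" >/dev/null 2>&1; then
    brew services start ollama
    for _ in $(seq 1 30); do
      curl -fsS "http://127.0.0.1:11434/" >/dev/null 2>&1 && return 0
      sleep 1
    done
    return 1
  fi
}
```

A caller would run `ensure_ollama` only when `route` prints `llm`, and could stop the service again with `brew services stop ollama` after a quiet period. Ollama already unloads idle models from memory on its own after a keep-alive window (5 minutes by default, configurable with `OLLAMA_KEEP_ALIVE`), so stopping the server mainly saves the remaining idle overhead.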