I wanted to see if I could run llamafile, which lets you distribute and run LLMs with a single file, on a Raspberry Pi 5. I hope to use my own custom front-end to interact with the model in different ways. I have a few ideas, but I wanted to get it running first.

Is it fast?

Yes, with a small model it's no problem. It generates text fast enough to be usable, though more slowly than popular commercial chatbots at the moment.

How good are the responses?

Not great in my tests, although some responses weren't bad. I'd like to experiment more to see what can be done with it.

Notes

Here are the steps I took to get it working. Any tips are welcome, as I'm new to many aspects of this:

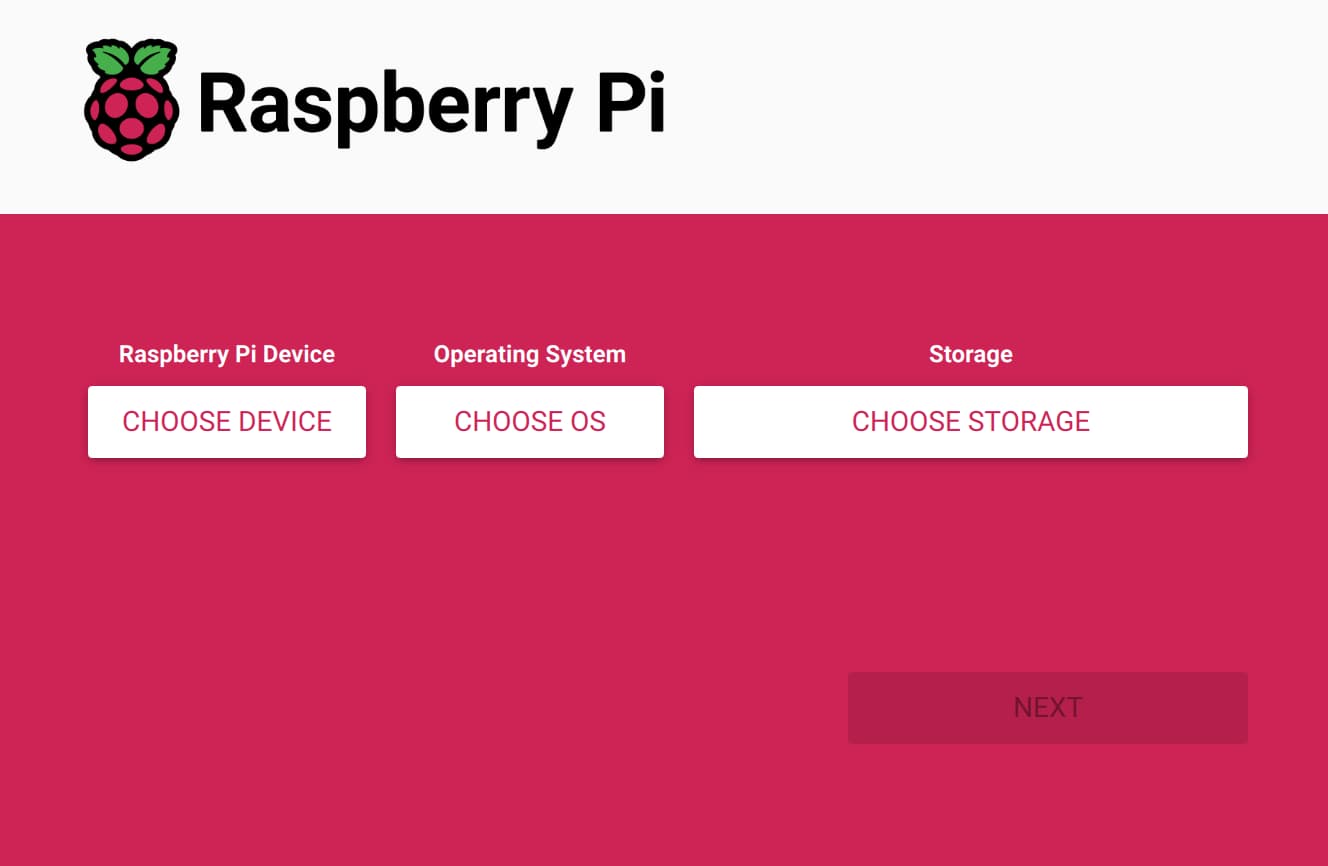

1: Pi OS setup

I used Raspberry Pi Imager to install Raspberry Pi OS Lite, connected to the Pi over SSH, and then updated the system:

sudo apt update; sudo apt full-upgrade -y

2: Install Required Packages
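Before installing anything, it can help to see which of the underlying tools are already on the Pi. This is a minimal sketch using only the POSIX command -v builtin; the function name missing_commands is hypothetical. Note that build-essential and python3-venv are package names, not commands, so the sketch checks for gcc, make and python3 instead.

```shell
# Hypothetical helper: print each command name given as an argument that is
# not found on PATH, one per line. Prints nothing if all are present.
missing_commands() {
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
  done
}

# gcc and make come from build-essential. python3-venv provides no command
# of its own, so this only checks for python3 itself.
missing=$(missing_commands gcc make wget curl unzip python3)
if [ -n "$missing" ]; then
  printf 'Not installed yet:\n%s\n' "$missing"
else
  echo "gcc, make, wget, curl, unzip and python3 are all present"
fi
```

Running it again after the apt install below should print the "all present" line.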

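I couldn't get things running without a virtual environment (recent Raspberry Pi OS releases block system-wide pip installs), which is why python3-venv is on the list. I installed the following packages: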

build-essential, wget, curl, unzip, and python3-venv

sudo apt install build-essential wget curl unzip python3-venv -y

3: Create a Virtual Environment

Create and activate a virtual environment.

python3 -m venv myenv

source myenv/bin/activate

4: Install Hugging Face CLI
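Since this step installs into the virtual environment, a quick check that it is actually active can save some confusion, for example after opening a new SSH session. A minimal sketch, relying on the fact that the venv activate script exports VIRTUAL_ENV (and deactivate unsets it); the function name venv_active is hypothetical:

```shell
# Hypothetical helper: succeed only when a virtual environment is active.
# The venv "activate" script exports VIRTUAL_ENV with the env's path.
venv_active() {
  [ -n "${VIRTUAL_ENV:-}" ]
}

if venv_active; then
  echo "Using virtual environment: $VIRTUAL_ENV"
else
  echo "No virtual environment active; run: source myenv/bin/activate" >&2
fi
```

Each new SSH session needs source myenv/bin/activate again before huggingface-cli is on the PATH, because pip installs it into myenv/bin.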

Install the Hugging Face CLI within the virtual environment:

pip install -U "huggingface_hub[cli]"

5: Log in to Hugging Face

Log in to Hugging Face using the CLI:

huggingface-cli login

Enter your read access token when prompted.
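If you'd rather not paste the token at an interactive prompt (for example when scripting the setup), huggingface-cli login also accepts it through its --token option. A sketch under a few assumptions: the token is kept in an HF_TOKEN environment variable, and the helper names looks_like_hf_token and hf_login_from_env are hypothetical. The format check only looks for the hf_ prefix that user access tokens start with; it does not validate the token with Hugging Face.

```shell
# Hypothetical helper: loose format check for a Hugging Face access token.
# User access tokens start with "hf_"; this only catches obvious
# copy/paste mistakes and does not contact the server.
looks_like_hf_token() {
  case "$1" in
    hf_?*) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: log in without the interactive prompt, reading the
# token from HF_TOKEN. Without --add-to-git-credential, the token is not
# stored as a git credential.
hf_login_from_env() {
  if ! looks_like_hf_token "${HF_TOKEN:-}"; then
    echo "Set HF_TOKEN to your read access token first" >&2
    return 1
  fi
  huggingface-cli login --token "$HF_TOKEN"
}
```

Usage: export HF_TOKEN=<YOUR_HUGGINGFACE_TOKEN>, then run hf_login_from_env.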

6: Download the TinyLlama Model

You can try whatever model you like. I first tried a larger one and it was too slow; TinyLlama runs quickly on the Pi 5.

wget --header="Authorization: Bearer <YOUR_HUGGINGFACE_TOKEN>" -O TinyLlama-1.1B-Chat-v1.0.Q8_0.llamafile https://huggingface.co/Mozilla/TinyLlama-1.1B-Chat-v1.0-llamafile/resolve/main/TinyLlama-1.1B-Chat-v1.0.Q8_0.llamafile

You can get an access token from your Hugging Face account settings; a read token is all this needs. You won't need to add it to your git credentials.
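The download URL follows the pattern https://huggingface.co/REPO/resolve/BRANCH/FILE, so switching models mostly means changing the repo and file name. A sketch that builds the URL and calls wget, assuming the token (if any) is in an HF_TOKEN environment variable; the helper names hf_resolve_url and hf_download are hypothetical. The Authorization header is only sent when HF_TOKEN is set, on the assumption that a public repository doesn't need it (I haven't confirmed that for this one).

```shell
# Hypothetical helper: build a Hugging Face "resolve" download URL for a
# file in a model repo. The revision defaults to the "main" branch.
hf_resolve_url() {
  repo=$1
  file=$2
  revision=${3:-main}
  printf 'https://huggingface.co/%s/resolve/%s/%s\n' "$repo" "$revision" "$file"
}

# Hypothetical helper: download FILE from REPO into the current directory.
# Adds the Authorization header only when HF_TOKEN is set.
hf_download() {
  url=$(hf_resolve_url "$1" "$2" "${3:-main}")
  if [ -n "${HF_TOKEN:-}" ]; then
    wget --header="Authorization: Bearer $HF_TOKEN" -O "$2" "$url"
  else
    wget -O "$2" "$url"
  fi
}
```

Usage: hf_download Mozilla/TinyLlama-1.1B-Chat-v1.0-llamafile TinyLlama-1.1B-Chat-v1.0.Q8_0.llamafile. Since the Hugging Face CLI is already installed, huggingface-cli download REPO FILE --local-dir . should also work as an alternative to wget.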

7: Grant Execution Permissions

Make the downloaded llamafile executable:

chmod +x TinyLlama-1.1B-Chat-v1.0.Q8_0.llamafile

8: Run the llamafile Server
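Before starting the server, a quick sanity check can catch a failed download: if wget saved an error page instead of the model, the file will be tiny and won't run. A sketch with a hypothetical make_runnable helper; the 1 MB threshold is an arbitrary assumption (a 1.1B-parameter Q8_0 model is well over a gigabyte).

```shell
# Hypothetical helper: make a downloaded file executable and confirm it.
# Fails if the file is missing or smaller than a minimum size, which
# usually means the download saved an error page instead of the model.
make_runnable() {
  f=$1
  min_bytes=${2:-1000000}   # assumption: real llamafiles are far larger
  if [ ! -f "$f" ]; then
    echo "No such file: $f" >&2
    return 1
  fi
  # tr strips the padding some wc implementations add
  size=$(wc -c < "$f" | tr -d ' ')
  if [ "$size" -lt "$min_bytes" ]; then
    echo "$f is only $size bytes; the download probably failed" >&2
    return 1
  fi
  chmod +x "$f" && [ -x "$f" ]
}
```

Usage: make_runnable TinyLlama-1.1B-Chat-v1.0.Q8_0.llamafile && echo "ready to run".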

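Start the llamafile server. The --host 0.0.0.0 option makes it listen on all network interfaces instead of only localhost, so other devices on your network can reach it:

./TinyLlama-1.1B-Chat-v1.0.Q8_0.llamafile --host 0.0.0.0 --no-browser

9: The Pi Should Now Be Running a Web Server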
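Before switching to another device, you can check from the Pi itself (in a second SSH session) that the server answers. This HTTP interface is also what a custom front-end would talk to: llamafile's README documents an OpenAI-compatible /v1/chat/completions endpoint, using "LLaMA_CPP" as the model name and "no-key" as a placeholder bearer token. A sketch under those assumptions; the helper names are hypothetical, and json_escape only handles single-line prompts (backslashes and double quotes, not newlines or other control characters).

```shell
# Hypothetical helper: escape a single-line string for a JSON string
# literal. Backslashes are escaped first, then double quotes.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Hypothetical helper: build an OpenAI-style chat request body with a
# single user message.
chat_payload() {
  printf '{"model":"LLaMA_CPP","messages":[{"role":"user","content":"%s"}]}\n' \
    "$(json_escape "$1")"
}

# Hypothetical helper: send a prompt to the llamafile server (default
# http://localhost:8080) and print the raw JSON response.
ask_llamafile() {
  base_url=${2:-http://localhost:8080}
  curl -s "$base_url/v1/chat/completions" \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer no-key" \
    -d "$(chat_payload "$1")"
}
```

Usage: ask_llamafile "Write a haiku about the Raspberry Pi". As with OpenAI's API, the reply text should be under choices[0].message.content in the returned JSON. From another machine, pass the Pi's address as the second argument, e.g. http://<your_raspberry_pi_ip>:8080.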

Open a web browser on another device connected to the same local network and navigate to:

http://<your_raspberry_pi_ip>:8080
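To find the address to use in that URL, hostname -I on the Pi prints all of its IP addresses separated by spaces. A small sketch that takes the first one; the helper name first_address is hypothetical, and if the Pi has both Wi-Fi and Ethernet connected (or IPv6 addresses), check that the first address is the one you actually want.

```shell
# Hypothetical helper: return the first word of a space-separated address
# list such as the output of `hostname -I`.
first_address() {
  # Intentional word splitting: each address becomes a positional parameter.
  # shellcheck disable=SC2086
  set -- $1
  printf '%s\n' "${1-}"
}

ip=$(first_address "$(hostname -I 2>/dev/null)")
echo "Open http://${ip:-<your_raspberry_pi_ip>}:8080 from another device"
```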